In 2026, customer service no longer follows the traditional “I fill out a form and wait for a call back” model. Expectations have shifted: users now want fast answers, personalized conversations, self-service when they choose, and a seamless omnichannel experience that stays consistent across every interaction.

To meet these expectations, humans alone are no longer enough to handle the volume and complexity of requests. That is not because advisors are doing a worse job; quite the opposite. It is because they’re expected to respond faster, across more channels, to more requests, with greater personalization, while still maintaining the same level of quality.

Let’s take a very simple example, one that many customers already experience in real life:

It’s 3 a.m., and a customer is facing a complex issue. They’re not looking for a link to the FAQ or a self-service journey that goes in circles. They want a useful answer, in their specific context, and most importantly, a solution.

What still felt out of reach five years ago is now achievable. A conversational AI agent can understand the request, retain context, respond in a personalized way, and, when needed, trigger the right action or hand off to a human advisor at the right moment.

Conversational AI is no longer a “nice-to-try” gadget. It’s becoming a critical building block of modern customer service. Many companies have already integrated it into their customer journeys, so the challenge is to start now to avoid falling behind while ensuring a fast rollout without compromising quality.

People might assume that phone contact is declining as customer touchpoints become increasingly digital. Yet it remains a core channel across many journeys.

Why? Because voice still checks three boxes that pure digital doesn’t always replace: immediacy, reassurance, and the ability to handle complexity.

Many field insights, including feedback shared during the VML & CASDEN webinar, confirm that voice (by phone or directly on the web) remains a preferred channel, especially for the most sensitive situations.

What’s changing in 2025-2026 is that AI is finally delivering real value on voice, where legacy systems (rigid IVRs, endless menus, cascading transfers) have long generated frustration. Voice AI isn’t replacing the voice channel; it’s addressing its historical limitations. It can better understand intent within the first seconds, route callers to the right journey without unnecessary repetition, and resolve more requests without adding friction.

The goal is to preserve the strengths of voice (immediacy, reassurance, the ability to handle complexity) while removing what undermines the experience.

In 2026, AI is becoming embedded in customer service journeys, but it doesn’t have unanimous support, and that’s normal. The more visible the technology becomes, the more trust turns into a central issue, for both companies and end customers.

On the customer side, several concerns come up repeatedly. The first is the fear of dealing with an unfair or inaccurate AI, especially due to algorithmic bias, which can lead to inappropriate or even discriminatory responses. AI can make mistakes, so how can customers be sure they’re getting the best answer? Transparency has also become a strong expectation: customers want to know whether they’re speaking to an AI, and don’t want to feel like the company is “hiding” who they’re interacting with. Many also wonder what is recorded or stored, how their data is used, and whether the company complies with the GDPR and the AI Act.

On the company side, hesitations are just as significant. First, there’s the fear of integrating an AI that responds poorly, hallucinates, or delivers awkward answers, something that can damage brand image within hours. There’s also the regulatory and legal pressure, with GDPR and AI Act compliance requirements, and exposure to penalties if governance isn’t properly managed.

Another on-the-ground reality must be considered: not all customers have the same level of digital maturity. Some audiences are still uncomfortable online and with AI tools, and they expect more traditional, simple, and reassuring journeys.

So the challenge isn’t simply to add AI into customer journeys, it’s to identify what may slow adoption, and to build these watchpoints into the design from the start to avoid rejection. Learn more about how to build a requirements document for a customer service AI agent in our white paper.

With the right approach, AI integration is not only possible, it can also strengthen trust rather than weaken it. This requires a trusted AI approach, with a clear framework, strong governance, and explicit usage rules. It also means putting quality-control mechanisms in place, for example through LLM as a Judge, to continuously assess the relevance, compliance, and reliability of responses. This relies on three key evaluation criteria: resolution, satisfaction, and compliance.

This method makes it possible to continuously improve response quality and strengthen transparency. With this analysis model, the AI quickly identifies areas for improvement and ensures that answers remain aligned with the reference documents. At DialOnce, this evaluation framework translates into tangible results: a 91.7% resolution rate, an average satisfaction score of 3.9/5, and a 99.6% compliance rate. This real-world implementation of LLM as a Judge shows that well-governed AI can combine efficiency, reliability, and consistency.
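To make the three criteria concrete, here is a minimal sketch of how per-conversation verdicts from a judge model could be aggregated into indicators of the kind cited above. The `JudgeVerdict` schema, its field names, and the sample values are hypothetical and purely illustrative; the judge model itself and DialOnce's actual scoring pipeline are not described in this article.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class JudgeVerdict:
    """One verdict returned by a judge model for one conversation (hypothetical schema)."""
    resolved: bool      # was the customer's request resolved?
    satisfaction: int   # estimated satisfaction on a 1-5 scale
    compliant: bool     # did the answer stay aligned with the reference documents?


def summarize(verdicts: list[JudgeVerdict]) -> dict[str, float]:
    """Aggregate verdicts into resolution rate, average satisfaction, and compliance rate."""
    if not verdicts:
        raise ValueError("at least one verdict is required")
    total = len(verdicts)
    return {
        "resolution_rate": sum(v.resolved for v in verdicts) / total,
        "avg_satisfaction": mean(v.satisfaction for v in verdicts),
        "compliance_rate": sum(v.compliant for v in verdicts) / total,
    }


# Illustrative sample only, not real measurements.
sample = [
    JudgeVerdict(resolved=True, satisfaction=4, compliant=True),
    JudgeVerdict(resolved=True, satisfaction=5, compliant=True),
    JudgeVerdict(resolved=False, satisfaction=2, compliant=True),
    JudgeVerdict(resolved=True, satisfaction=4, compliant=False),
]
summary = summarize(sample)
```

Keeping the three criteria as separate fields, rather than one blended score, makes it easier to see whether a drop in quality comes from unresolved requests, dissatisfied customers, or answers drifting away from the reference documents.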

And above all, it’s not about forcing a single channel. Some customers want to be autonomous, while others need immediate reassurance. The goal is to orchestrate a journey that provides the right level of support at the right time with a smooth escalation to a human advisor when needed, and clear alternatives for audiences who are less comfortable with digital.

For a long time, many companies tried to handle growing demand with traditional chatbots, often built on rigid scenarios and decision trees. The goal was to encourage self-care (autonomous resolution), route customers faster, and keep advisors focused on higher-value requests.

The problem is that these chatbots were designed for relatively simple, linear, and predictable journeys. But in 2026, requests are more diverse, more contextual, and above all, more urgent.

A static FAQ is useful as long as the customer asks the exact expected question and the answer is up to date. Contact forms work as long as the customer is willing to wait and doesn’t need an immediate response. IVRs can route requests, but they quickly become frustrating as soon as the journey doesn’t match the proposed menu. As for script-based chatbots, they create the illusion of a conversation, but they get stuck as soon as the wording falls outside the predefined framework.

In practice, these solutions quickly reach their limits because they rely on a multiple-choice journey logic, whereas customers expect a real understanding of their need.

When these systems fail to meet the customer’s needs, the consequences are fairly consistent.

First, there is a drop in satisfaction, because customers feel like they are wasting time or going in circles. Then many end up bypassing the journey: they call back, insist, look for another channel, or go straight to a human advisor, often with frustration already building up. This overloads advisors, not only because the requests end up reaching them anyway, but also because they often arrive in a more complex and degraded state. The customer has already tried several options and is losing patience, and the advisor has to rebuild the context from scratch.

In the end, there is a very visible gap between the digital promise (“simple, fast, and self-service”) and the reality experienced by the customer.

This is often where confusion still exists. Many customers, and sometimes even companies, still have the 2018-2020 chatbot in mind: a tool that asks a few questions, offers two buttons, and gets stuck as soon as you step outside the script.

A traditional scripted chatbot relies on a fixed decision tree. It works as long as the customer follows the expected path. As soon as the wording changes, it misunderstands, gives an irrelevant answer, or sends the user back to the start. This creates loops, dead ends, and a quickly frustrating experience. Most importantly, it has no contextual memory: it can’t pick up and continue a conversation the way a human advisor would.
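The limitation is easy to see in a toy version of such a chatbot. The sketch below is a hypothetical decision tree (node names, prompts, and choices are invented for illustration) in which every step only accepts an exact keyword and anything else sends the user back to the start.

```python
# Hypothetical decision tree: each node lists the exact inputs it accepts.
DECISION_TREE = {
    "start": {
        "prompt": "Is your request about billing or a technical issue?",
        "choices": {"billing": "billing", "technical": "technical"},
    },
    "billing": {
        "prompt": "Do you need an invoice copy or help with a payment?",
        "choices": {"invoice": "send_invoice", "payment": "payment_help"},
    },
    "technical": {"prompt": "Please describe the issue to an advisor.", "choices": {}},
    "send_invoice": {"prompt": "Your invoice has been sent.", "choices": {}},
    "payment_help": {"prompt": "An advisor will review your payment.", "choices": {}},
}


def next_node(current: str, user_input: str) -> str:
    """Follow the tree on an exact match; otherwise restart, losing all context."""
    choices = DECISION_TREE[current]["choices"]
    return choices.get(user_input.strip().lower(), "start")


# On-script input moves forward...
on_script = next_node("start", "Billing")
# ...but free-form wording falls outside the tree and sends the user back to the start.
off_script = next_node("billing", "I was charged twice for my rent")
```

Here `on_script` is `"billing"` while `off_script` is `"start"`: the customer's actual problem is discarded, which is exactly the loop described above.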

The conversational AI agent of 2025-2026 changes the underlying approach. It moves from a decision-tree model to true natural language understanding.

NLP (Natural Language Processing) enables AI to analyze a sentence as it is expressed, without requiring a specific syntax. LLMs (Large Language Models) bring a more nuanced understanding of meaning, context, and intent. And generative AI makes it possible to produce a written, contextualized response that remains consistent with the information available. An agent built on these technologies can also handle multiple languages, continuously improve through interactions and corrections, and integrate across channels without breaking journey continuity.

Customers no longer feel like they are talking to a menu; they feel like they are getting an answer that adapts to their situation.

According to Insee, in 2024, 10% of French companies with 10 employees or more reported using at least one artificial intelligence technology, compared to 6% in 2023. 33% of companies with 250 employees or more were using AI, compared to 9% of organizations with fewer than 50 employees. These gaps show that AI is already a reality for large enterprises and that adoption is now accelerating across the mid-market, with increasingly concrete use cases, especially in customer journeys.

1001 Vies Habitat chose DialOnce to structure its contact journeys with an AI agent dedicated to social housing providers, designed to go beyond a simple chatbot. Its integration improved accessibility for tenants, enabled requests to be pre-qualified before being passed on to internal teams, and strengthened service quality across the entire tenant journey.

"Thanks to DialOnce’s conversational agent, we have not only improved the accessibility and responsiveness of our service, but also optimized the management of incoming requests, allowing our tenants to get instant answers to their queries, 24/7. This project has been a real driver for enhancing the customer experience with AI while keeping our operational costs under control."

Maud Flory-Boudet

Head of Multichannel Customer Relations, 1001 Vies Habitat

If conversational AI is becoming a must-have in 2026, it’s not because it’s trendy. It’s because it enables personalization without adding complexity, helps absorb higher volumes without compromising quality, and ensures a seamless experience across every channel.

In 2026, personalization is a standard expectation in customer service journeys. A well-integrated conversational AI can adapt to the customer contacting support by taking their context and history into account. It can access relevant information in real time (case file, contract, last interaction, request status) and adjust its tone, level of detail, or vocabulary depending on the customer profile.

This approach has a direct impact on the experience: customers no longer need to repeat themselves, they feel recognized, and resolution becomes faster. It improves customer satisfaction by reducing one of the most common pain points in customer service: having to repeat information that has already been provided.

However, personalization and autonomy should not come at the expense of journey clarity. Customers can feel lost if escalation to a human advisor is poorly handled, or if the switch to AI feels too abrupt. This is especially important for audiences who are less comfortable with digital tools. Some customers need more guided support, or a more traditional alternative. The best approach is to move step by step, with clear communication and simple cues throughout the journey. It also means supporting customers, explaining the role of AI, and making it easy for them to switch to a more traditional option whenever needed.

Data: the fuel behind personalization

Personalization only works if data is high-quality, compliant, and regularly updated.

If reference documents are incomplete, outdated, or inconsistent, the AI will inevitably generate approximate answers. And in a customer journey, an approximate answer is costly: it creates frustration, wastes time, and weakens trust.

This scenario happens often: a company deploys conversational AI with a knowledge base that isn’t kept up to date. The agent then starts hallucinating, mixing up rules, or giving outdated information. And in the end, customers don’t just remember that the AI made a mistake, they mainly remember that the company is not reliable.

Conversational AI is becoming essential because it addresses a challenge every support team knows well: doing more, faster, without continuously hiring.

By automating repetitive tasks (recurring questions, tracking updates, appointment scheduling, first-level requests), it helps increase the volume of requests handled while reducing pressure on teams.

It also improves processing speed, which directly impacts costs and leads to shorter wait times, fewer drop-offs, and fewer follow-ups. For businesses, this translates into an easier-to-measure and often faster ROI. Beyond support, conversational AI can also contribute to lead generation by qualifying requests, guiding users toward the right offer, or triggering an appointment at the right time.

A significant share of requests comes in outside business hours, and in 2026, customers expect an immediate response, not an invitation to contact you tomorrow.

By handling part of the demand at any time, the AI agent significantly reduces wait times, limits drop-offs, and improves the customer experience, while helping maintain stable conversion rates even when teams are unavailable. As a result, AHT (average handling time) decreases by around 20% on average, thanks to automated answers and faster routing.

But availability alone isn’t enough. Even if an AI agent responds 24/7, the experience remains frustrating if customers have to start from scratch every time they switch channels.

In 2026, expectations are therefore twofold: customers want a fast answer, but above all they want to move naturally between chat, messaging, and voice while keeping the context.

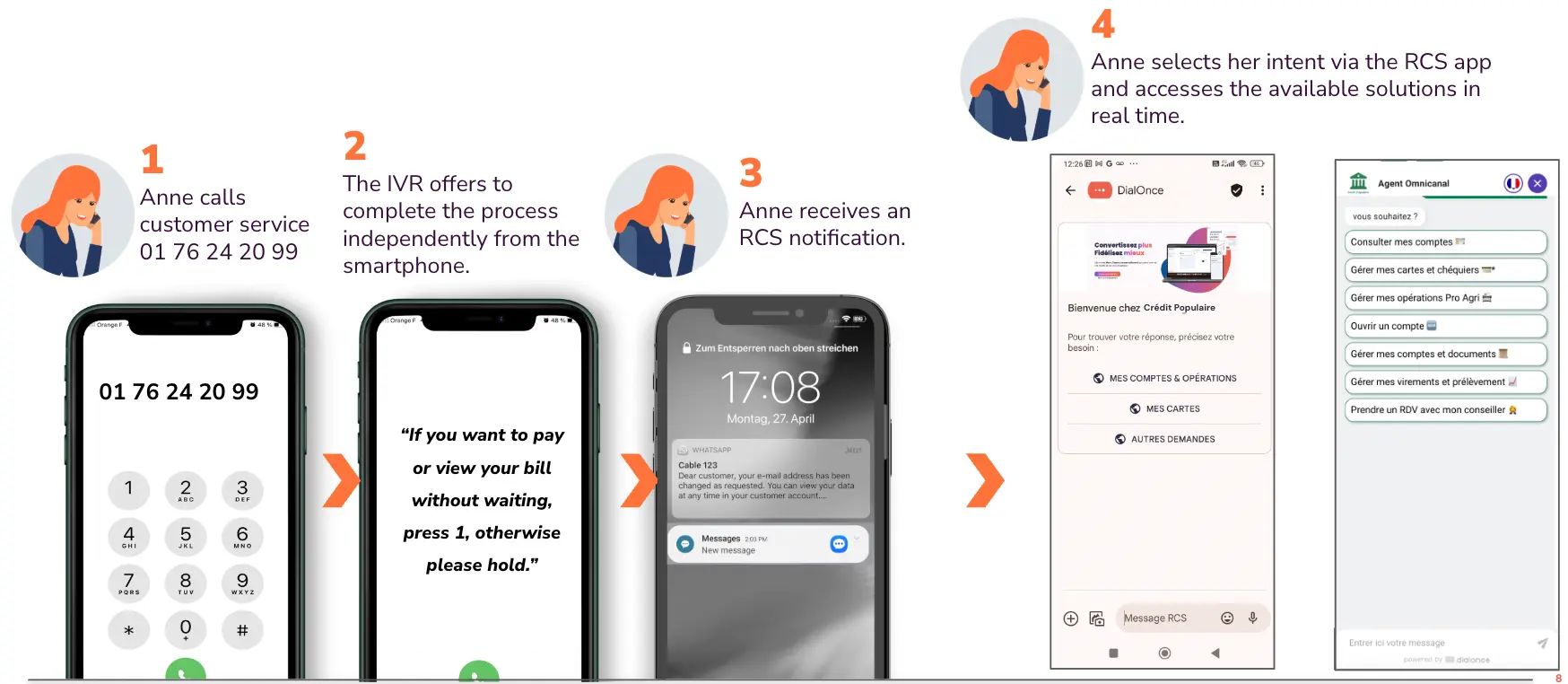

This is exactly what RCS (Rich Communication Services) offers. It enables the delivery of rich multimedia content (images, videos, documents), the addition of interactive buttons, and more granular message contextualization, all without requiring a third-party app, since it is natively integrated into messaging apps on Android and iOS. As a result, the journey becomes smoother and customers avoid having to explain their situation again at every step.

"Businesses aim to better control and qualify incoming flows, especially by creating bridges between channels to retain knowledge of the history of behavior and interactions with customers from one channel to another, in order to personalize communication and accelerate processing times."

Raphael Gourévitch

Senior Consulting Manager, SIA

Conversational AI is becoming essential because it improves the perceived quality of service.

First, responses become more accurate and consistent. Customers receive the same level of information quality regardless of the channel they use or when they contact the company, reducing experience gaps between two advisors or two touchpoints.

Second, the relationship becomes more proactive. The AI agent can directly suggest the most useful next action (a link, a document, a step to validate, an appointment) and guide customers faster toward the right solution. Customers move through their journey more quickly, with less effort and fewer back-and-forth interactions.

In advisor-customer interactions, AI can also act as real-time assistance, suggesting relevant information or next-best actions to trigger.

This is exactly the approach behind DialOnce’s Augmented advisor: an AI agent that contextualizes each request, generates automatic summaries, recommends predictive actions, and integrates easily with your tools thanks to API compatibility.

This shift transforms the advisor’s role, gradually moving from answering questions to solving complex problems.

However, this shift doesn’t happen without support and change management. In the field, some teams may express resistance, often driven by a simple and legitimate concern: will AI replace me?

Another key factor in team adoption is upskilling. Without training, AI can be misused or misunderstood, quickly creating frustration, both for advisors and for customers. The goal isn’t to teach AI in a purely theoretical way, but to help teams adopt it in their day-to-day work, understand its limits, and know when to take back control.

How can you secure team adoption of conversational AI?

Success starts with support, collaboration, and co-design with advisors. When teams are involved from the beginning, they become stakeholders in the project, and AI is seen as support, not as a constraint.

It also depends on the choice of solution. High-performing customer service AI isn’t just a technology; it’s a coherent end-to-end system. You need a robust solution, backed by a strong domain team with real customer service expertise. At DialOnce, this expertise is built on more than 10 years of experience.

And that’s the key point: AI doesn’t replace humans. It allows them to focus on what they do best: understand, build relationships, and solve complex situations.

Accessibility is no longer optional. It is now part of a more demanding regulatory framework, driven in particular by the European Accessibility Directive and the RGAA (General Accessibility Improvement Framework), with potential penalties in case of non-compliance.

For many organizations, conversational AI makes it possible to better serve audiences who previously faced overly complex or poorly adapted journeys. For people with visual impairments, a voice AI agent, powered by ASR (Automatic Speech Recognition) and TTS (Text-to-Speech), can provide a simpler and more natural way to access information. For deaf or hard-of-hearing users, enriched text-based interactions combined with features such as speech transcription improve understanding and fluidity. For older users, guided journeys, clearer messaging, and constant availability help reduce friction.

And this goes far beyond disability. For non-French speakers, real-time translation and multilingual support remove a common barrier to accessing service, making interactions clearer and more reassuring.

Beyond compliance, there is also an often underestimated benefit: well-designed accessibility generally improves the experience for all customers, not just specific audiences, by making journeys simpler, easier to follow, and more efficient.

In 2026, we’re no longer talking only about conversational AI; we’re talking about a fundamental shift in the customer service model. The companies that stay ahead are those that anticipate technological changes, but above all those that understand how these evolutions will reshape customer expectations and the way teams are organized.

As an industry player, here are the key trends we see emerging for 2026-2027, trends that, in our view, will shape the next wave of customer service transformation:

One of the strongest signals is the growing ubiquity of agents. They are becoming mainstream on the consumer side because they make it easier to access information and take action. They are also being deployed across businesses, as they enable teams to handle more requests, faster, while maintaining a high level of consistency.

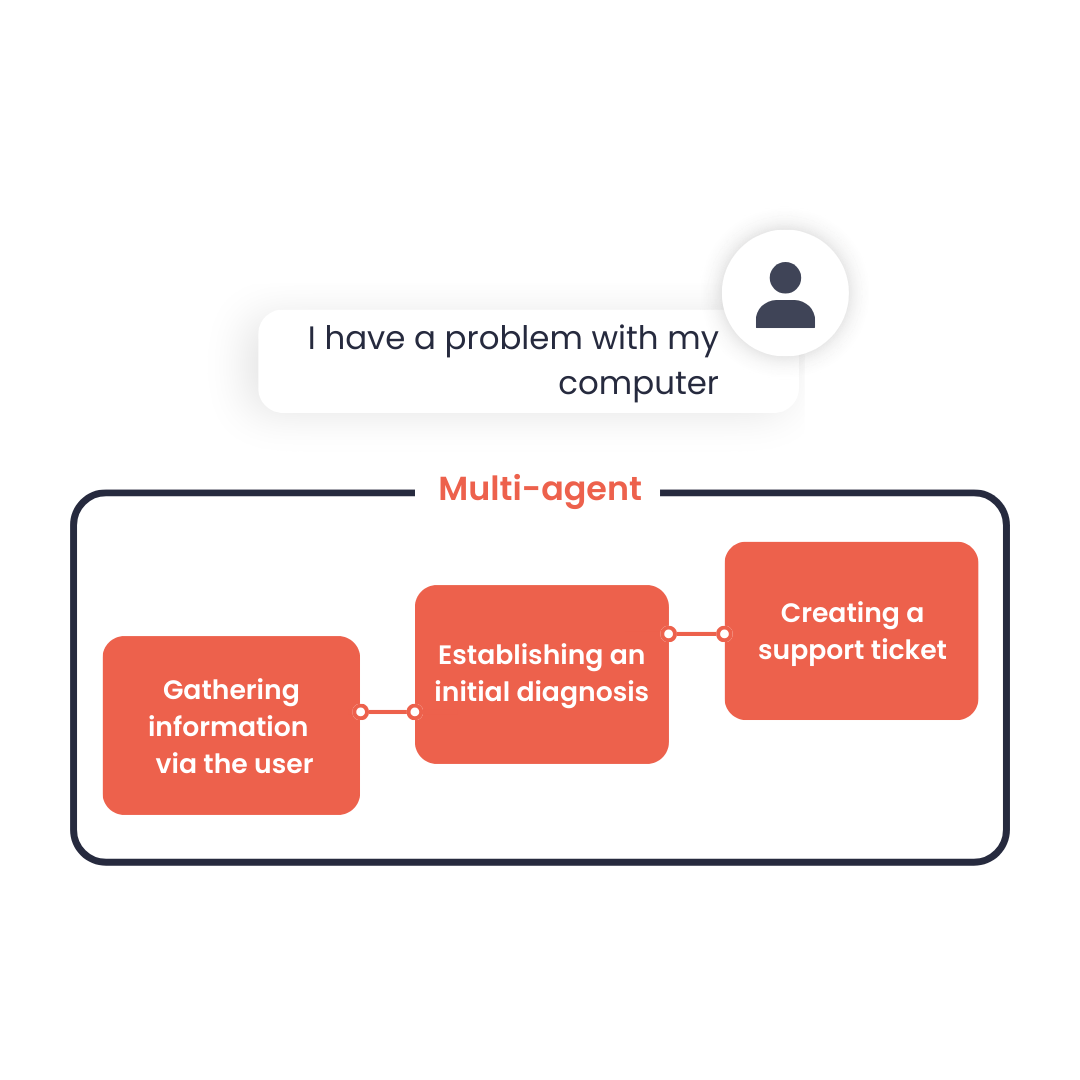

What is starting to change is how these agents will be organized. We’re no longer talking about a single agent answering questions, but about environments where multiple agents collaborate, each with its own role, data, and capabilities. This is what we’re beginning to see with A2A (agent-to-agent) approaches, MAS (multi-agent systems), and agent-first MVP (minimum viable product) strategies that speed up time-to-market.

With this approach, interconnection becomes easier. Journeys can be built faster, scenarios evolve more smoothly, and companies can deploy intelligent building blocks without starting from scratch at every iteration.

This creates major opportunities for early movers, especially those that can become pioneers by offering customer service agents on marketplaces, capable of answering, qualifying, routing, and triggering actions, while staying aligned with business rules.

But this dynamic also introduces a new challenge: the more orchestration becomes standardized, the easier it becomes to replicate. Differentiation will therefore not come solely from having an agent, but from the quality of its integration, the robustness of its guardrails, and its ability to deliver a truly seamless experience.

This shift is also accompanied by intense market pressure. The price war is accelerating, driven by the falling cost of LLMs thanks to optimization, and by new players, such as AWS, making these technologies more accessible. At the same time, we’re seeing a wave of consolidation: major vendors are expanding their offerings, while smaller players that miss the turn risk disappearing, being acquired, or becoming highly specialized.

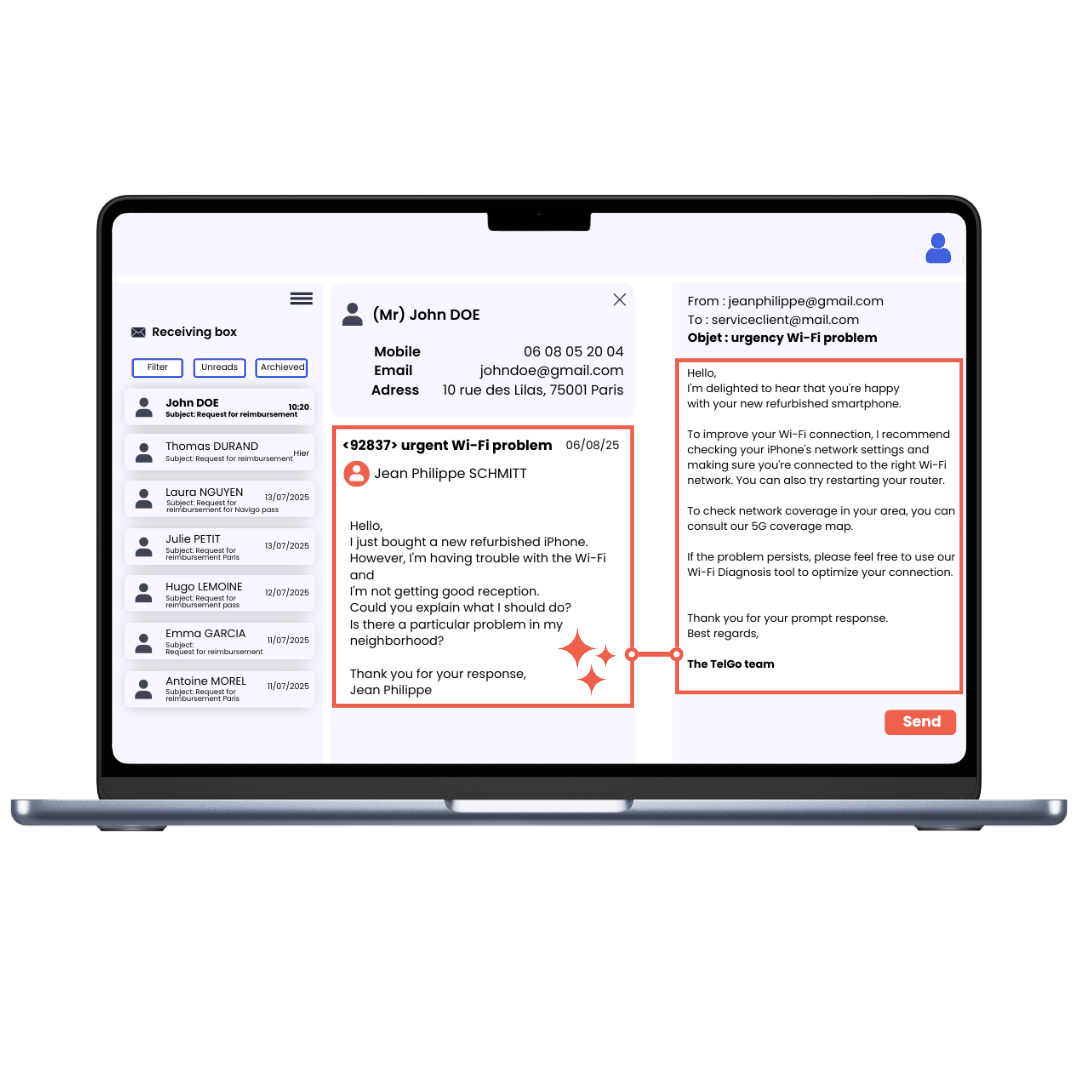

After learning to understand intent, AI is starting in 2026 to integrate a key dimension of customer service: the emotional state in which a request is expressed.

This approach relies on sentiment analysis. Based on tone, word choice, or the rhythm of an interaction, AI will begin detecting frustration, urgency, or, on the contrary, satisfaction. Once the signal is identified, the agent can adjust its behavior, rephrase when needed, adapt its tone, and avoid responses that could increase customer frustration.

This is also what will enable smarter escalation. When strong negative emotion is detected, routing to a human advisor can become a priority, with the context already clarified. For this to work, integration with business data and tools is essential, as it helps the AI understand the customer’s real situation and the level of urgency involved.

Let’s take a concrete example. A tenant contacts customer service after receiving a payment reminder, even though they claim they already paid. They are annoyed, don’t understand what’s happening, and want an immediate solution. The AI agent detects tension in the message, checks the case status, asks only for the missing information if needed, then proposes a clear next step (send proof of payment, confirm it has been received, and provide a processing timeframe). And if the risk of escalation is high, it immediately transfers the request to a senior advisor with a summary of the situation and the information already collected, so the customer doesn’t have to repeat everything.
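The routing logic in this example can be sketched in a few lines. Everything below is an assumption made for illustration: the `Case` structure, the action names, the threshold, and especially `tension_score`, which is a naive keyword counter standing in for a real sentiment model.

```python
from dataclasses import dataclass, field

# Naive stand-in for a real sentiment model: counts tension markers in the message.
TENSION_MARKERS = ("already paid", "unacceptable", "again", "immediately", "!")


@dataclass
class Case:
    """Minimal view of the tenant's file (hypothetical fields)."""
    customer_id: str
    payment_received: bool
    collected_info: dict = field(default_factory=dict)


def tension_score(message: str) -> int:
    """Count occurrences of tension markers; a real agent would use a trained model."""
    text = message.lower()
    return sum(text.count(marker) for marker in TENSION_MARKERS)


def next_step(message: str, case: Case, escalation_threshold: int = 3) -> dict:
    """Escalate with a summary when tension is high; otherwise propose a clear next action."""
    score = tension_score(message)
    if score >= escalation_threshold:
        return {
            "action": "transfer_to_senior_advisor",
            "summary": {
                "customer_id": case.customer_id,
                "tension_score": score,
                "payment_received": case.payment_received,
                "collected_info": case.collected_info,
            },
        }
    if case.payment_received:
        return {"action": "confirm_payment_received"}
    return {"action": "request_proof_of_payment"}


case = Case(customer_id="T-001", payment_received=False)
calm = next_step("Hello, I received a reminder but I think I paid.", case)
tense = next_step("I already paid! This is unacceptable, I want this fixed immediately!", case)
```

The calm message leads to a request for proof of payment, while the tense one triggers a transfer that carries the case summary with it, so the advisor does not have to ask for the same information a second time.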

Between 2026 and 2027, the voice channel is expected to keep gaining importance in customer service journeys. Whenever there is urgency, complexity, or a need for reassurance, voice remains a particularly effective way to interact. In fact, our AI Barometer 2025, conducted in partnership with Kiamo, pointed in the same direction: in 2025, 72% of French people said they trust phone advisors, a slight increase compared to 2023 (70%).

What we anticipate is a gradual expansion of these use cases beyond traditional phone calls. Voice will become more prominent on mobile, on desktop, and through connected interfaces, because it reduces customer effort and speeds up access to an answer.

The signals are moving in that direction, with the rise of voice modes and the wider adoption of speech-to-speech technologies, driven in particular by players such as Illuin and Cognigy.

This means voice should become a natural part of omnichannel journeys, rather than remaining a separate channel. And for businesses, the main challenge will be to modernize the voice experience by avoiding rigid flows, context-less transfers, and repetitions, which still rank among the biggest pain points today.

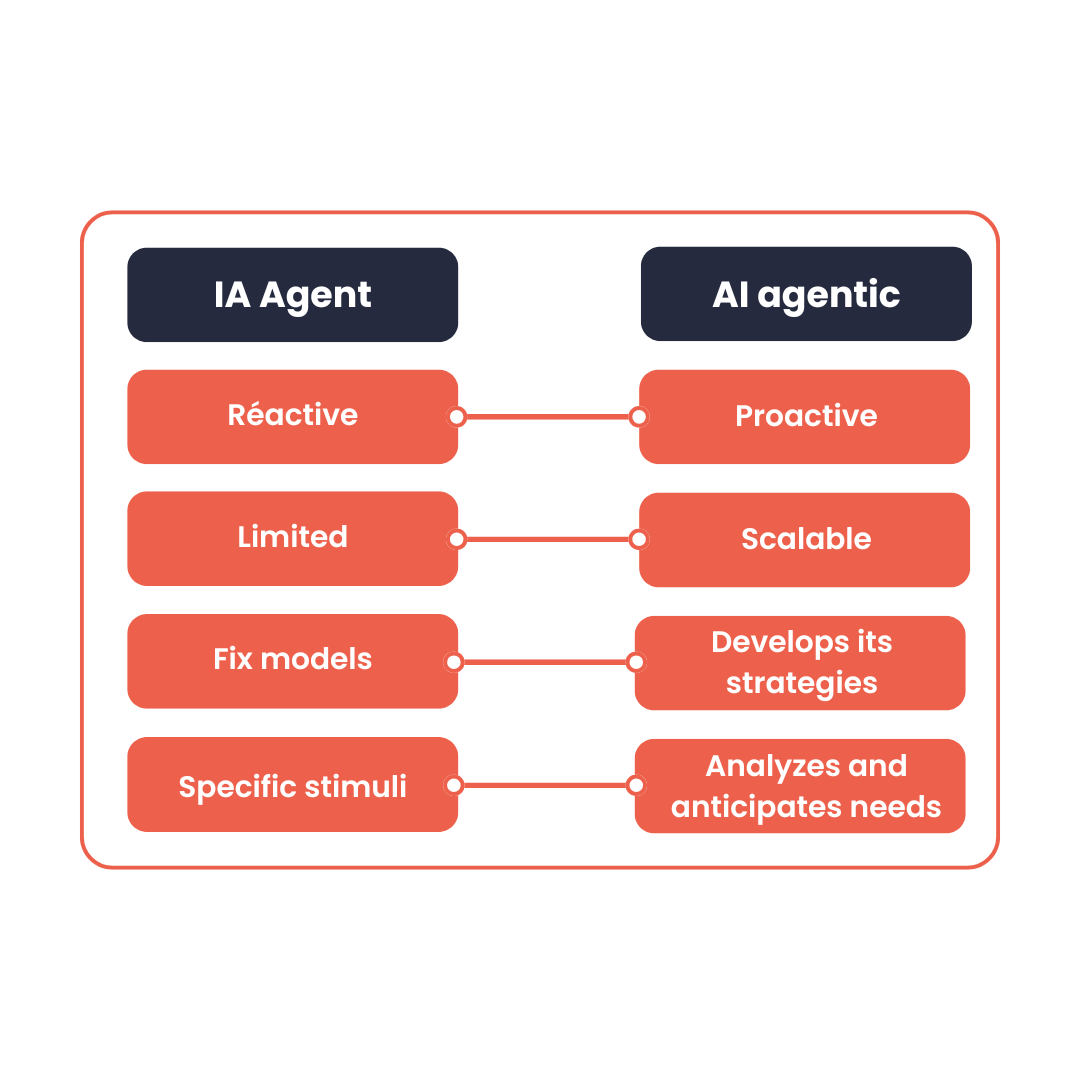

In 2026, we’re no longer talking only about AI that interacts with customers, but about agents that can reason, plan, and take action, with a clear goal: automate more while improving resolution.

Step by step, agentic AI will become an unavoidable topic. The goal is to move from AI that simply answers questions to AI that can decide, plan, and execute actions to move a request forward.

In other words, the agent will no longer be limited to understanding a request and generating a response. It will be able to adapt, take initiative, and trigger a coherent sequence of actions to solve a problem, without requiring human intervention at every step.
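As a rough illustration of the "plan, then execute" idea, the sketch below runs a fixed sequence of actions and hands off to a human as soon as no plan exists or a step fails. The tool functions, the goal name, and the hard-coded `plan` are hypothetical stand-ins: in a real agent, an LLM would typically produce the plan and the tools would call business systems.

```python
from typing import Callable


# Hypothetical tools: each one updates the shared context and reports success.
def check_case_status(ctx: dict) -> bool:
    ctx["status"] = "payment_pending"
    return True


def request_missing_document(ctx: dict) -> bool:
    ctx["requested"] = "proof_of_payment"
    return True


def notify_customer(ctx: dict) -> bool:
    ctx["notified"] = True
    return True


TOOLS: dict[str, Callable[[dict], bool]] = {
    "check_case_status": check_case_status,
    "request_missing_document": request_missing_document,
    "notify_customer": notify_customer,
}


def plan(goal: str) -> list[str]:
    """Stand-in planner returning a fixed sequence of tool names for a known goal."""
    if goal == "resolve_payment_dispute":
        return ["check_case_status", "request_missing_document", "notify_customer"]
    return []


def run_agent(goal: str, max_steps: int = 5) -> dict:
    """Execute the plan step by step; hand off to a human if there is no plan or a step fails."""
    ctx: dict = {"goal": goal, "done": [], "handoff": False}
    steps = plan(goal)
    if not steps:
        ctx["handoff"] = True
        return ctx
    for name in steps[:max_steps]:
        if not TOOLS[name](ctx):
            ctx["handoff"] = True
            break
        ctx["done"].append(name)
    return ctx


resolved = run_agent("resolve_payment_dispute")
unknown = run_agent("something_unplanned")
```

The `max_steps` cap and the explicit `handoff` flag are simple examples of guardrails: the agent can act without human intervention at every step, but it stops and escalates rather than improvising when it has no valid path.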

This shift will also accelerate thanks to standards such as MCP (Model Context Protocol), which pave the way for stronger interoperability between AI agents. The goal is no longer to multiply isolated building blocks, but to create environments where agents can share context, coordinate, and act more seamlessly.

"The goal is no longer just to respond quickly, it’s to fully resolve the request end to end, with AI that can take action, verify, and complete the process, while remaining reliable and properly governed."

Charles Dunston

CEO, DialOnce

Another major trend for 2026-2027 is the comeback of custom (tailor-made) development. The more AI solutions become widespread, the more companies look to differentiate themselves, not through the technology itself, but through the experience they deliver, the smoothness of their journeys, and their ability to reflect real operational needs.

This trend is mainly driven by two factors. On one hand, open source makes certain building blocks more accessible and easier to adapt. On the other hand, AI-powered development assistants significantly reduce the time needed to prototype, test, and integrate custom features.

In practice, the “build vs. buy” question will quickly come back to the table. Buying is often the fastest way to move forward and secure a reliable foundation. But as soon as you need to adapt to specific journeys, tools, and business constraints, adding custom elements in a few key areas often becomes the best option, provided you clearly define what will need to be maintained and evolve over time.

In the coming months, we expect to see the acceleration of a verticalization (specialization) trend. General-purpose AI remains useful, but it quickly reaches its limits as soon as the topic becomes industry-specific, regulated, or highly contextual.

The shift is therefore moving toward more specialized AI, with fine-tuned models tailored to each sector, capable of embedding compliance logic, precise terminology, and truly operational use cases. This is particularly valuable in industries such as banking and insurance, where response quality and risk control are non-negotiable.

Market signals are pointing in the same direction. Investors are increasingly favoring vertical SaaS solutions, because they address specific needs more effectively and create stronger barriers to entry.

Overly generic AI can quickly lack nuance on industry-specific topics and reduce the quality of guidance. In contrast, specialized AI often makes the difference because it is more accurate, more reliable on compliance, and more precise in the terminology it uses.

It is also a strong product opportunity. By offering vertical solutions, vendors can strengthen their credibility and meet, with greater precision, the expectations of industries where customer service is highly regulated.

AI will gradually become embedded into everyday tools. We’re moving from standalone modules to a more native AI, directly built into CRMs, ERPs, and support platforms.

Each business application will be able to assist users, analyze information, suggest the most relevant next action, or automate certain steps, right where the work happens, at the right moment.

This is also what will accelerate adoption. Teams won’t need to switch tools or completely relearn how they work. AI will integrate into existing processes, delivering value progressively, rather than forcing a new environment or adding yet another layer to manage.

In 2026-2027, conversational AI will no longer be just a response channel. It will also become a far more actionable source of business insights, because it captures increasingly valuable data: real customer interactions.

Instead of navigating fragmented dashboards, teams will be able to query AI like an analyst, for example by asking questions such as: “What are the top five reasons for dissatisfaction this month?” or “What generates the most repeat contacts before resolution?” This kind of approach saves time, but above all helps teams move faster toward optimization actions.

What changes is AI’s ability to automatically detect trends and identify weak signals: a gradual rise in requests about a specific topic, recurring confusion around a journey step, or a growing pain point that isn’t yet visible in traditional KPIs. By analyzing all conversations, AI can surface early signs of degradation and help prioritize what needs to be improved.
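One simple way to picture weak-signal detection is to compare each topic's latest weekly volume with its own recent baseline. The heuristic below is only a sketch under that assumption; the topic names, counts, and thresholds are invented, and a production system would more likely rely on topics extracted from conversations and on more robust statistics.

```python
from statistics import mean


def rising_topics(
    weekly_counts: dict[str, list[int]],
    min_ratio: float = 1.5,
    min_volume: int = 5,
) -> list[str]:
    """Flag topics whose latest weekly volume exceeds min_ratio times their earlier average."""
    flagged = []
    for topic, counts in weekly_counts.items():
        if len(counts) < 2:
            continue  # not enough history to compare against
        baseline = mean(counts[:-1])
        latest = counts[-1]
        # min_volume avoids flagging topics that are still too small to matter.
        if latest >= min_volume and latest > min_ratio * baseline:
            flagged.append(topic)
    return sorted(flagged)


# Illustrative weekly contact counts per topic (oldest week first).
history = {
    "password reset": [40, 42, 39, 41],
    "invoice layout": [3, 4, 6, 11],
    "moving out": [1, 0, 1, 2],
}
signals = rising_topics(history)
```

In this sample only "invoice layout" is flagged: its volume is still small compared with "password reset", so it would barely move a global KPI, yet it has clearly outgrown its own baseline.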

Over time, it will become a true decision-support tool, not based on a sample, but on the analysis of all customer interactions, with a more granular view of root causes, impacts, and opportunities.

Frugality will continue to gain traction. AI will keep growing in power, but with two constraints to manage: energy and cost.

On the energy side, the carbon footprint of large language models (LLMs) is becoming increasingly hard to ignore. On the cost side, LLM usage can quickly become expensive, especially as interaction volumes scale.

That’s why we’re seeing more frugal approaches emerge. Optimized models, using techniques such as quantization (reducing weight precision) or pruning (removing unnecessary parameters), will help lower consumption and improve speed. Local inference (running the model to generate answers) is also progressing through edge computing approaches, enabling some requests to be handled closer to the user or the infrastructure, without relying systematically on the cloud.
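To give a sense of what these two techniques do, here is a toy sketch in plain Python: symmetric 8-bit quantization with a single shared scale, and magnitude-based pruning. It is an illustration on a handful of made-up weights, not how any particular framework implements them; real toolchains work per tensor or per channel and usually recalibrate or fine-tune afterwards.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric quantization: map floats to integers in [-127, 127] using one shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    return [round(w / scale) for w in weights], scale


def dequantize(quantized: list[int], scale: float) -> list[float]:
    """Recover approximate float weights from the integers and the scale."""
    return [q * scale for q in quantized]


def prune(weights: list[float], threshold: float) -> list[float]:
    """Magnitude pruning: zero out weights whose absolute value is below the threshold."""
    return [0.0 if abs(w) < threshold else w for w in weights]


# Made-up weights for illustration.
weights = [0.5, -1.27, 0.0, 0.31]
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)
pruned = prune(weights, threshold=0.4)
```

Each weight is stored as a small integer instead of a 32-bit float, at the cost of a rounding error of at most half the scale per weight, while pruning replaces the smallest weights with zeros that can be skipped or compressed.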

In practice, this will push companies to choose between a highly powerful model and a lighter one, depending on the real need. That’s also why Small Language Models (SLMs) are gaining momentum. Lighter than LLMs, they follow a different logic, with lower costs, reduced latency, and improved energy efficiency.

Technological sovereignty is set to become a more structuring criterion in AI projects. The combination of the GDPR and the European AI Act is raising requirements around data, how it is processed, and accountability.

In this context, European digital sovereignty adds a further constraint. Some organizations are already limiting the use of US-based solutions, even when they rely on European partnerships, and this trend is expected to grow stronger.

For French and European companies, this means choosing technology solutions and hosting options that meet these requirements, while still maintaining the ability to deploy quickly.

But it’s also an opportunity. “Made in France” or “Made in EU” AI can become a strong commercial differentiator, especially in sensitive and highly regulated industries.

Securing AI usage will gradually become a prerequisite. The more conversational AI systems are connected to tools and data, the more exposed they become to new types of risk. These risks are widely documented, notably through work published by ANSSI and the CNIL on the use cases and vulnerabilities of conversational AI.

Key issues to anticipate include prompt injection (attempts to manipulate the AI), risks of sensitive data leakage, and certain adversarial attacks. The challenge is therefore to be able to trust AI, not only in terms of performance, but also in terms of explainability, bias management, and information protection. DialOnce has chosen the LLM as a Judge approach: an evaluation model that continuously checks response quality, measures resolution and satisfaction, and ensures content remains aligned with the reference documents.

In 2026, conversational AI has nothing in common with the chatbots of 2020. It understands natural language, maintains context, integrates with business tools, and resolves requests far more smoothly.

Most importantly, it meets new demands. Customers now expect immediate answers, volumes are increasing, and teams must keep up with limited resources. Conversational AI now delivers real value in terms of productivity, operational efficiency, customer satisfaction, and ROI.

And this is only the beginning. In 2026-2027, agentic, multimodal, and proactive approaches will continue to reshape customer journeys, with AI systems that progressively become better not only at understanding, but also at acting and anticipating.

The earlier companies adopt AI, the more they can test, refine, and improve gradually without having to overhaul everything overnight. And that’s what will make the difference in the years ahead. Companies that start now will deliver a smoother customer service experience and give themselves the means to secure a more sustainable future.